Patterns and Anti-Patterns for Building with LLMs

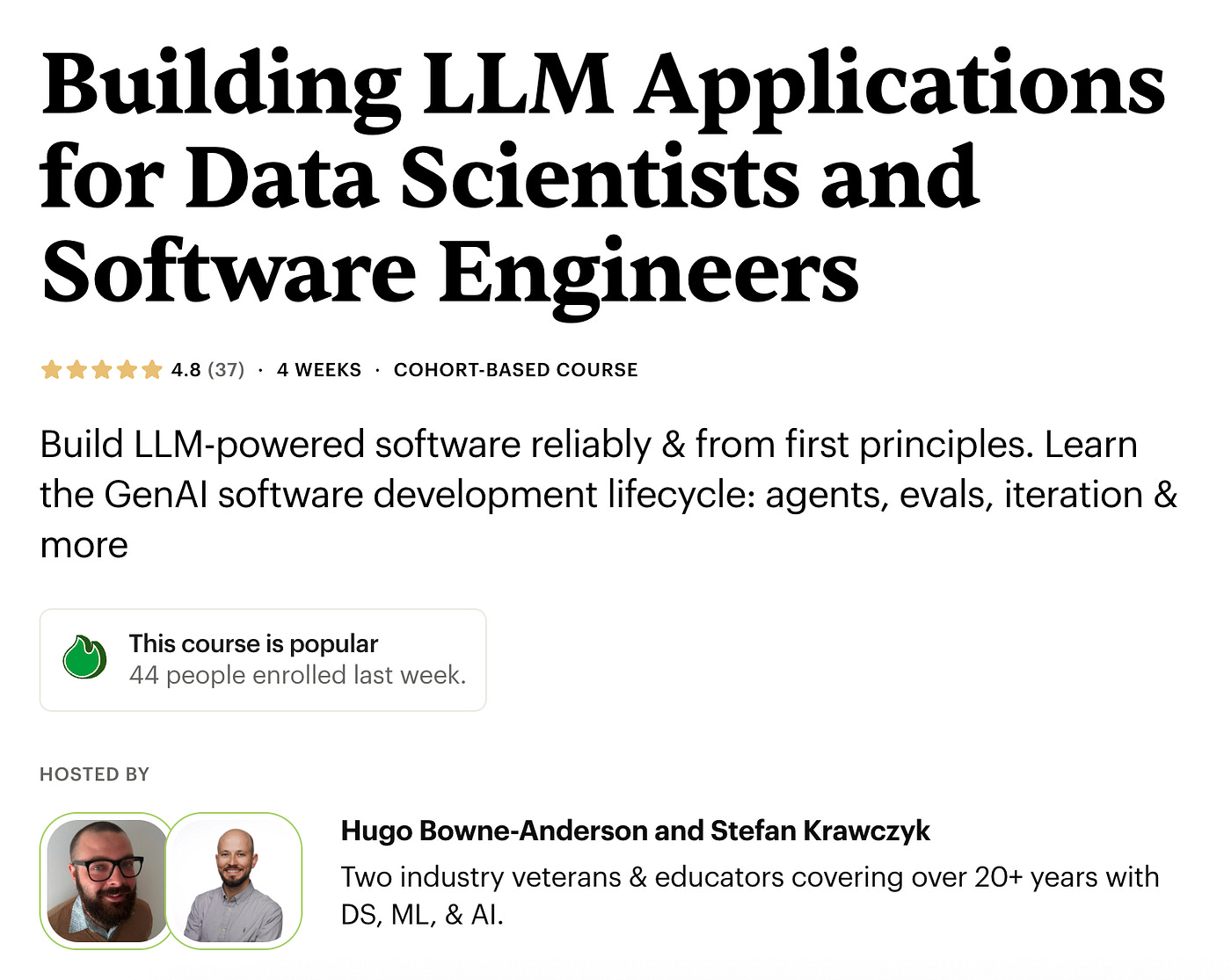

A bit about our guest author: Hugo Bowne-Anderson advises and teaches teams building LLM-powered systems, including engineers from Netflix, Meta, and the United Nations, through his course on the AI software development lifecycle. It covers everything from retrieval and evaluation to agent design and all the steps in between. Use the code MARVELOUS25 for 25% off.

In a recent Vanishing Gradients podcast, I sat down with John Berryman, an early engineer on GitHub Copilot and author of Prompt Engineering for LLMs.

We framed a practical discussion around the “Seven Deadly Sins of AI App Development,” identifying common failure modes that derail projects.

For each sin, we offer a “penance”: a clear antidote for building more robust and reliable AI systems.


👉 This was a guest Q&A from our July cohort of Building AI Applications for Data Scientists and Software Engineers. Enrolment is open for our next cohort (starting November 3).👈

Sin 1: Demanding 100% Accuracy

The first sin is building an AI product with the expectation that it must be 100% accurate, especially in high-stakes domains like legal or medical documentation [00:03:15]. This mindset treats a probabilistic system like deterministic software, a mismatch that leads to unworkable project requirements and potential liability issues.

The sin is requiring these AI systems to be more accurate than you would require a human to be.

The Solution/Penance:

Reframe the problem. The goal is not to replace human judgment but to save users time.

Design systems that make the AI’s work transparent, allowing users to verify the output and act as the final authority.

By setting the correct expectation (that the AI is a time-saving assistant, not an infallible oracle), you can deliver value without overpromising on reliability [00:04:30].
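One way to make the AI’s work verifiable is to have the model cite a verbatim quote from the source for every claim, then check mechanically whether each quote actually appears there before the user reviews it. This is a minimal sketch, assuming the model’s output has already been parsed into `Claim` objects; `Claim` and `triage_claims` are hypothetical names, and whitespace- and case-insensitive substring matching is a deliberately simple stand-in for fuzzier matching.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    """One statement from the model, plus the verbatim quote it cites as evidence."""
    text: str
    source_quote: str


def _normalize(s: str) -> str:
    # Collapse whitespace and case so line breaks in the source don't break matching.
    return " ".join(s.split()).lower()


def triage_claims(claims: list[Claim], source: str) -> tuple[list[Claim], list[Claim]]:
    """Split claims into (grounded, needs_review) by checking each quote against the source."""
    haystack = _normalize(source)
    grounded: list[Claim] = []
    needs_review: list[Claim] = []
    for claim in claims:
        quote = _normalize(claim.source_quote)
        if quote and quote in haystack:
            grounded.append(claim)
        else:
            needs_review.append(claim)  # missing or unmatched quote: flag for the user
    return grounded, needs_review


# Example: the second claim cites text that isn't in the source.
source = "The lease term is twelve months, beginning on 1 March."
claims = [
    Claim("The lease lasts a year.", "lease term is twelve months"),
    Claim("Rent is due weekly.", "rent is payable weekly"),
]
grounded, needs_review = triage_claims(claims, source)
```

A matched quote shows only that a claim is grounded, not that it is correct. The user stays the final authority; the tool’s job is to point their attention at the claims that most need it.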

Sin 2: Granting Agents Too Much Autonomy

This sin involves giving an agent a large, complex, closed-form task and expecting a perfect result without supervision [00:07:20]. Due to the ambiguity of language and current model limitations, the agent will likely deliver something that technically satisfies the request but violates the user’s implicit assumptions. This hands-off approach is particularly ineffective for tasks that have a specific required solution, though it can be useful for open-ended research.

The Solution/Penance:

Keep the user in the loop. Break down large tasks into smaller steps and build in opportunities for the agent to ask clarifying questions.

Make the agent’s process and intermediate steps visible to the user.

This collaborative approach narrows the solution space and ensures the final output aligns with the user’s actual intent [00:09:10].
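A minimal sketch of keeping the user in the loop, assuming the large task has already been broken into named steps. Each step is a plain Python callable standing in for an agent or LLM call (hypothetical, not any particular framework’s API); after every step the loop shows the intermediate result to a reviewer, who can accept it, edit it, or halt the run.

```python
from typing import Callable, Optional

# A step takes the results accepted so far and proposes its own output.
Step = tuple[str, Callable[[dict[str, str]], str]]
# The reviewer sees (step_name, proposed_output) and returns the text to keep,
# possibly edited, or None to stop the workflow.
Reviewer = Callable[[str, str], Optional[str]]


def run_supervised(steps: list[Step], review: Reviewer) -> dict[str, str]:
    """Run steps in order, pausing after each one for human review."""
    context: dict[str, str] = {}
    for name, step in steps:
        proposed = step(context)
        accepted = review(name, proposed)
        if accepted is None:
            # The user rejected this step; stop rather than compound the error.
            break
        context[name] = accepted
    return context


# Example: the reviewer corrects the outline, and the draft builds on the correction.
steps: list[Step] = [
    ("outline", lambda ctx: "1. Intro 2. Method 3. Results"),
    ("draft", lambda ctx: f"Draft following: {ctx['outline']}"),
]


def reviewer(name: str, proposed: str) -> Optional[str]:
    # In a real app this would be a UI prompt; here it is scripted.
    if name == "outline":
        return proposed + " 4. Limitations"
    return proposed


result = run_supervised(steps, reviewer)
```

Because each step only sees what the reviewer accepted, a correction early in the run steers everything after it, which is the narrowing of the solution space described above.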

Sin 3: Irresponsible Context Stuffing

With ever-larger context windows, the temptation is to simply “shove it all into the prompt” and perform RAG over the full content [00:11:45]. This approach degrades model accuracy, as critical information gets lost in the noise. It also increases cost and latency without a corresponding improvement in performance.

The Solution/Penance:

Practice responsible “context engineering.”

Instead of brute-forcing the context window, first lay out all potential information sources.

Then, create a relevance-ranking algorithm to tier the context, ensuring only the most vital information makes it into the prompt.

Build a template that fits within a sensible token budget, not the maximum window size, ensuring the final context is lean, readable, and effective [00:13:20].
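The tiering step can be sketched as a greedy packer: each candidate snippet carries a priority tier and a relevance score (assumed here to come from your own ranking logic), snippets are sorted by tier and then score, and they are added until a token budget is reached. The `Snippet` type, the four-characters-per-token estimate, and the default budget are illustrative assumptions; a real system would count tokens with the model’s own tokenizer.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str   # where the text came from, e.g. "open_file" or "docs"
    text: str
    tier: int     # 0 = must include; higher numbers are less essential
    score: float  # relevance within a tier; higher is better


def estimate_tokens(text: str) -> int:
    # Rough heuristic (~4 characters per token for English); swap in a real tokenizer.
    return max(1, len(text) // 4)


def assemble_context(snippets: list[Snippet], budget: int = 2000) -> str:
    """Greedily pack the most important snippets into a fixed token budget."""
    ranked = sorted(snippets, key=lambda s: (s.tier, -s.score))
    chosen: list[Snippet] = []
    used = 0
    for snippet in ranked:
        cost = estimate_tokens(snippet.text)
        if used + cost > budget:
            continue  # skip what doesn't fit; a smaller, later snippet still might
        chosen.append(snippet)
        used += cost
    # Render into a simple template with a labeled section per source.
    return "\n\n".join(f"[{s.source}]\n{s.text}" for s in chosen)


snippets = [
    Snippet("docs", "a" * 400, tier=2, score=0.9),       # ~100 tokens
    Snippet("open_file", "b" * 200, tier=0, score=0.5),  # ~50 tokens
    Snippet("history", "c" * 80, tier=1, score=0.7),     # ~20 tokens
]
ctx = assemble_context(snippets, budget=80)  # keeps open_file and history, drops docs
```

Choosing the budget well below the model’s maximum window is the point: the template stays small enough to read and debug.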

Sin 4: Starting with Multi-Agent Complexity

A common mistake is to jump directly to a complex, orchestrated multi-agent framework for a problem that may not require it [00:17:10]. This introduces significant overhead, creates hard-to-debug handoffs between agents, and often results in a system that underperforms a simpler, single-agent alternative.

The Solution/Penance:

Start with the simplest viable solution.

Build a single-prompt, single-model system first to understand its failure modes and limitations.

Only when the pain of the simple approach becomes clear should you incrementally add complexity.

This process ensures that if a multi-agent system is truly needed, its design is informed by real-world constraints rather than assumptions [00:19:15].
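A cheap way to learn a simple system’s failure modes is to run one prompt over a handful of labeled examples and tally how it fails. In this sketch, `call_llm` is a hypothetical stand-in for whatever single-model client you use, `classify_failure` is an assumed hand-written function, and the invoice-extraction prompt is only an example task; the point is that failures are bucketed and counted before any extra agents are introduced.

```python
from collections import Counter
from typing import Callable

PROMPT = "Extract the invoice total from the text below. Reply with the number only.\n\n{text}"


def run_baseline(
    cases: list[tuple[str, str]],
    call_llm: Callable[[str], str],
    classify_failure: Callable[[str, str], str],
) -> Counter:
    """Run a single prompt over (input, expected) pairs and count failure modes."""
    failures: Counter = Counter()
    for text, expected in cases:
        output = call_llm(PROMPT.format(text=text)).strip()
        if output != expected:
            failures[classify_failure(output, expected)] += 1
    return failures


# Example with a fake model so the harness runs offline.
canned = {"Total: $120": "120", "Subtotal 90, total 100": "90", "No total listed": "N/A"}


def fake_llm(prompt: str) -> str:
    # Stand-in for a real API call: look up a canned answer by the input text.
    text = prompt.split("\n\n", 1)[1]
    return canned[text]


def classify(output: str, expected: str) -> str:
    return "wrong_number" if output.replace(".", "").isdigit() else "non_numeric"


cases = [("Total: $120", "120"), ("Subtotal 90, total 100", "100"), ("No total listed", "0")]
report = run_baseline(cases, fake_llm, classify)
```

A report like this tells you which failures a better prompt might fix and which, if any, justify more machinery.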

Sin 5: Treating RAG as a Black Box

Many developers adopt off-the-shelf RAG frameworks, treating them as a single, monolithic component [00:20:45]. When the system fails, this black-box approach makes it nearly impossible to diagnose whether the fault lies in the data ingestion, the retrieval step, or the generation model.

The Solution/Penance:

Build your own RAG system, and consider the individual pieces of the pipeline: indexing, query creation, retrieval, and generation.

By isolating these components, you can debug them independently.

If the generated answer is wrong, you can inspect the retrieved context to see if the issue is poor retrieval.

If the context is good but the answer is bad, the problem lies with the generation prompt or model.

This separation is key to building a reliable and maintainable system [00:22:10].
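The separation can be made concrete by giving each stage its own function, so you can inspect what flows between them. This is a toy sketch under obvious assumptions: retrieval is naive keyword overlap rather than embeddings, `generate` takes a hypothetical `call_llm` function, and `diagnose` assumes a labeled example with a known key fact to look for.

```python
import re
from typing import Callable

Index = list[tuple[set[str], str]]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def build_index(docs: list[str]) -> Index:
    """Indexing: precompute a token set for each document chunk."""
    return [(tokenize(doc), doc) for doc in docs]


def make_query(question: str) -> set[str]:
    """Query creation: just the question's tokens here; could be an LLM rewrite."""
    return tokenize(question)


def retrieve(index: Index, query: set[str], k: int = 2) -> list[str]:
    """Retrieval: rank chunks by keyword overlap and drop chunks with none."""
    ranked = sorted(index, key=lambda item: len(item[0] & query), reverse=True)
    return [doc for tokens, doc in ranked[:k] if tokens & query]


def generate(question: str, chunks: list[str], call_llm: Callable[[str], str]) -> str:
    """Generation: ask the model to answer only from the retrieved chunks."""
    context = "\n".join(chunks)
    return call_llm(f"Answer using only this context:\n{context}\n\nQuestion: {question}")


def diagnose(answer: str, chunks: list[str], key_fact: str) -> str:
    """Attribute a failure to a stage by inspecting the intermediate output."""
    fact = key_fact.lower()
    if fact in answer.lower():
        return "ok"
    if not any(fact in chunk.lower() for chunk in chunks):
        return "retrieval"   # the answer never made it into the context
    return "generation"      # context was good; the prompt or model is at fault


docs = ["The refund window is 30 days.", "Shipping takes 5 business days."]
index = build_index(docs)
question = "How long is the refund window?"
chunks = retrieve(index, make_query(question), k=1)
answer = generate(question, chunks, call_llm=lambda prompt: "I am not sure.")
verdict = diagnose(answer, chunks, key_fact="30 days")  # "generation"
```

Swapping any one stage (say, embeddings for keyword overlap) leaves the others and the diagnosis untouched.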

Sin 6: Overloading a Single Prompt

This sin is the smaller-scale version of giving agents too much autonomy: trying to pack too many distinct tasks into a single LLM request [00:26:25]. Asking a model to extract multiple, unrelated pieces of information or perform several judgments at once often leads to it hallucinating, missing tasks, or producing low-quality output as its attention is divided.

The Solution/Penance:

Break the problem into smaller, atomic pieces.

Instead of one large prompt asking for everything, use several smaller, focused prompts, which can even be run in parallel.

For example, rather than asking a model to “find all hallucinations,” iterate through each fact and ask, “Is this statement supported by the source? True or False.”

This trades a small amount of latency for a large gain in accuracy and reliability [00:28:10].
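The per-fact check can be sketched with a thread pool from the standard library, since each call is independent and I/O-bound. `judge` is a hypothetical function wrapping one LLM request; the question follows the article’s example, and parsing the reply as True/False assumes you have constrained the model’s output to those words.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

CHECK_PROMPT = (
    "Source:\n{source}\n\n"
    "Statement: {claim}\n\n"
    "Is this statement supported by the source? True or False."
)


def parse_verdict(reply: str) -> bool:
    """Treat anything other than a leading 'true' as unsupported."""
    return reply.strip().lower().startswith("true")


def check_claims(
    claims: list[str],
    source: str,
    judge: Callable[[str], str],
    max_workers: int = 8,
) -> dict[str, bool]:
    """Run one small, focused check per claim, in parallel."""
    prompts = [CHECK_PROMPT.format(source=source, claim=c) for c in claims]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        replies = list(pool.map(judge, prompts))  # map preserves input order
    return {claim: parse_verdict(reply) for claim, reply in zip(claims, replies)}


# Example with a fake judge so the sketch runs offline.
source = "The meeting is on Tuesday in Room 4."


def fake_judge(prompt: str) -> str:
    # Stand-in for an LLM call: "supports" a statement that appears verbatim in the source.
    claim = prompt.split("Statement: ", 1)[1].split("\n\n", 1)[0]
    return "True" if claim in source else "False"


verdicts = check_claims(["The meeting is on Tuesday", "The meeting is in Room 7"], source, fake_judge)
```

Because the checks run concurrently, wall-clock time is closer to that of a few requests than to the sum of all of them; in practice, provider rate limits may cap `max_workers`.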

Sin 7: Chasing Frameworks and Logos

The AI ecosystem is seeing an explosion of new tools, SDKs, and models, which creates a temptation to constantly search for the perfect off-the-shelf solution [00:29:15]. Over-indexing on third-party frameworks too early can lead to vendor lock-in, deep and opaque stack traces, and technical debt when the ecosystem inevitably shifts.

The Solution/Penance:

Dig in and build essential components yourself, at least initially. Understand that at its core, an LLM call is just an HTTP request.

For domain-specific needs like evaluation or human review, a simple, custom-built tool is often more effective than a generic platform.

Adopt frameworks mindfully: pick one that solves a clear problem, and anticipate that you will still need to write and rewrite code as your needs evolve [00:31:10].
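To see that an LLM call really is just an HTTP request, here is a standard-library-only sketch against an OpenAI-style chat completions endpoint. The URL, request body, and response shape (`choices[0].message.content`) follow OpenAI’s public Chat Completions API; other providers differ, so treat those details as assumptions to check against your provider’s documentation. Building the request is kept separate from sending it so it can be inspected without network access.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for a single chat completion."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def complete(prompt: str, model: str) -> str:
    """Send the request and return the first choice's text."""
    request = build_request(prompt, model, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Calling `complete("Hello", model=...)` with any model your account can access returns plain text. Conveniences such as retries or streaming sit on top of a request like this one, which makes a framework’s stack traces easier to reason about once you have seen the bare version.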

A Summary of Penances

Accuracy: Reframe the goal. Instead of trying to make AI 100% accurate, make it a way to augment your users and save them time.

Oversight: When working with complex workflows, keep the user in the loop and break down large agentic tasks into smaller, supervised steps.

Context: Avoid model distraction by selectively curating context. Rank context by relevance and select only the most relevant information.

Complexity: Start with the simplest possible solution before adding multi-agent complexity.

RAG: To make debugging easy, design RAG as a transparent pipeline of indexing, query creation, retrieval, and generation.

Prompts: Improve accuracy by decomposing complex multi-part prompts into several prompts that are easier for the model to process.

Frameworks: Build core components yourself and adopt third-party tools mindfully, anticipating change.

Conclusion

The path to building reliable AI applications is paved with a deeper understanding of the models themselves.

John Berryman offers a powerful mental model: empathize with the LLM by treating it as a “super intelligent, super eager, and a forgetful AI intern” [00:34:10]. This intern is brilliant but not psychic, gets distracted easily, and wakes up every morning (with every API call) with no memory of the past.

This perspective shifts the focus from finding the perfect tool to designing robust, debuggable systems that work with the model’s nature, not against it.
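The intern’s lack of memory is literal for typical chat APIs: each request is stateless, so the application has to resend whatever history it wants the model to see. A minimal sketch, assuming a hypothetical `call_llm` function that takes a list of role/content messages (the shape used by OpenAI-style chat APIs) and returns the reply text; `Conversation` is likewise an illustrative name.

```python
from typing import Callable

Message = dict[str, str]


class Conversation:
    """Keeps the running transcript, since the model itself remembers nothing."""

    def __init__(self, call_llm: Callable[[list[Message]], str], system: str):
        self.call_llm = call_llm
        self.messages: list[Message] = [{"role": "system", "content": system}]

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        # Every call sends the full history; omit it and the "intern" starts fresh.
        reply = self.call_llm(list(self.messages))
        self.messages.append({"role": "assistant", "content": reply})
        return reply


# Example with a fake model that records how much history it was sent.
seen_lengths: list[int] = []


def fake_llm(messages: list[Message]) -> str:
    seen_lengths.append(len(messages))
    return f"reply {len(seen_lengths)}"


chat = Conversation(fake_llm, system="You are a helpful intern.")
chat.ask("Summarize the spec.")
chat.ask("Now list open questions.")
# seen_lengths is now [2, 4]: the second call resent the whole exchange.
```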

Want to go deeper? I teach a course on the entire AI software development lifecycle (use code MARVELOUS25 for 25% off), covering everything from retrieval and evaluation to observability, agents, and production workflows. It’s designed for engineers and teams who want to move fast without getting stuck in proof-of-concept purgatory.

If you’d like a taste of the full course, I’ve put together a free 10-part email series on building LLM-powered apps. It walks through practical strategies for escaping proof-of-concept purgatory: one clear, focused email at a time.

Copyright: This article was originally published on the Vanishing Gradients blog.